How A.I. Magnifies Systemic Bias

Introduction to Artificial Intelligence: How AI Magnifies Systemic Bias

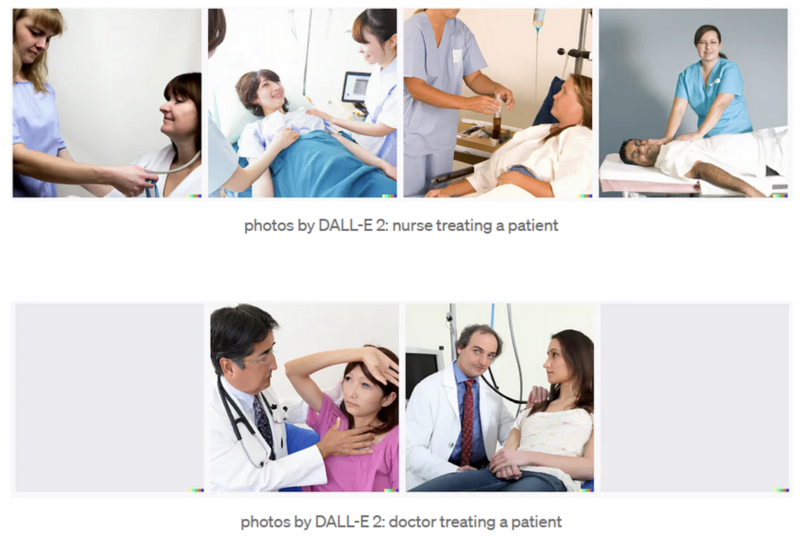

AI is unavoidable, and its societal effects are often less than desirable. Over the past few years we’ve seen the rollout of widely available AI tools, including Large Language Models like ChatGPT[1], generative art models like DALL-E[2], and less visible uses such as the systems that decide which targeted ads we receive. When we start to examine these tools, however, we notice that they don’t work for everyone, and that they are biased and magnify negative stereotypes. One clear visual example comes from the release of DALL-E 2, which generates an image from a text prompt[3]. When it is prompted to show pictures of different professions, long-standing gender and racial stereotypes arise: nurses are women, doctors are men, and everyone is white.

AI, and Machine Learning in particular, is strong at recognizing patterns. This means that when it is applied to a society that exhibits patterns of oppression, it learns to oppress. Biases in AI models have led to wrongful arrests, lost scholarships, loan denials, wrongful cheating accusations, misdiagnoses, and more, and these harms consistently fall hardest on BIPOC individuals, Queer individuals, Women, and People with Disabilities.[4][5]
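To make the pattern-learning point concrete for a lab, here is a minimal Python sketch. Everything in it is hypothetical: the groups "A" and "B", the 1-to-5 skill score, and the historical approval rates are invented for illustration. The "model" is the simplest possible pattern learner, a lookup table that memorizes the most common past outcome for each (skill, group) pattern, standing in for a real ML model. Because the invented history approved group B less often at the same skill level, the learned model does too.

```python
import random
from collections import Counter, defaultdict

# Hypothetical, invented historical approval rates by skill level (1-5).
# Group "B" was approved less often than group "A" at the same skill:
# this is the biased pattern hidden in the "historical data".
PAST_APPROVAL_RATE = {
    "A": {1: 0.2, 2: 0.3, 3: 0.7, 4: 0.8, 5: 0.9},
    "B": {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.3, 5: 0.7},
}

def make_history(n, seed=0):
    """Simulate n past decisions as (skill, group, approved) tuples."""
    rng = random.Random(seed)
    history = []
    for _ in range(n):
        skill = rng.randint(1, 5)
        group = rng.choice(["A", "B"])
        approved = rng.random() < PAST_APPROVAL_RATE[group][skill]
        history.append((skill, group, approved))
    return history

def train(history):
    """'Learn' by memorizing the most common past outcome per (skill, group)."""
    outcomes = defaultdict(Counter)
    for skill, group, approved in history:
        outcomes[(skill, group)][approved] += 1
    return {pattern: c.most_common(1)[0][0] for pattern, c in outcomes.items()}

def approval_rate(model, group):
    """Share of skill levels 1-5 at which the model approves this group."""
    return sum(model[(skill, group)] for skill in range(1, 6)) / 5

model = train(make_history(10_000))
print(model[(4, "A")], model[(4, "B")])  # same skill, different decision
print(approval_rate(model, "A"), approval_rate(model, "B"))
```

Running it prints `True False` (a skill-4 applicant is approved in group A and rejected in group B) and then `0.6 0.2`. Nothing in the code says "treat group B worse"; the model simply copies the pattern in the data it was given.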

My focus as an AI fellow was on how we can provide a technical vocabulary and understanding to students outside of the computer science discipline, in a way that places humanity at the forefront. The goal is to empower students to recognize potential misuse of AI, to challenge Technochauvinism (the belief that technology is always the solution [6]), and to understand how to keep themselves safe in the post-AI world.

Current Computer Science Electives are structured as paired classroom lectures and practical labs. Each lecture period should focus on a specific topic of AI/ML (e.g. Forecasting, Translation, Language Modelling, Facial Recognition, …), first defining the task and exploring how human intelligence might solve it, then demonstrating how AI solves it, and finally exploring how the AI is implemented. Discussing how humans solve complex tasks serves both as a reminder that AI is not the only solution and as a way to critique which elements of human intelligence don’t translate to AI (or which elements of AI improve upon human intelligence). This leads into discussing how the specific technique can be used for good and, conversely, how it might cause harm.

The lab periods can then be used to build our own versions of the models discussed, following heavily guided exercises and exploring where pitfalls and dangers might come in. For example, when designing a forecasting model it is possible to include variables that are proxies for race, gender, sexual orientation, religion, … and then make predictions based on those variables. Google Colab hosts an existing ML fairness tutorial, which can serve as a strong starting point.[7]
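One way a guided lab could show the proxy problem is sketched below in Python, the language of the Colab notebook cited above. This is an illustrative sketch, not code from that tutorial, and all names and numbers in it are hypothetical. The group column is hidden from the model (an approach sometimes called "fairness through unawareness"), but an invented `neighborhood` feature that correlates strongly with group is left in, standing in for a real-world proxy such as a postal code. The learner is a simple majority-vote lookup table rather than a production forecasting model.

```python
import random
from collections import Counter, defaultdict

# Hypothetical, invented historical approval rates by skill (1-5).
# Group "B" was approved less often than group "A" at the same skill.
PAST_APPROVAL_RATE = {
    "A": {1: 0.2, 2: 0.3, 3: 0.7, 4: 0.8, 5: 0.9},
    "B": {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.3, 5: 0.7},
}

def make_people(n, seed):
    """Simulate (group, neighborhood, skill, past_approved) records.
    The invented neighborhood is a proxy: 90% of group A live "north",
    90% of group B live "south"."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        group = rng.choice(["A", "B"])
        home = "north" if group == "A" else "south"
        away = "south" if group == "A" else "north"
        neighborhood = home if rng.random() < 0.9 else away
        skill = rng.randint(1, 5)
        approved = rng.random() < PAST_APPROVAL_RATE[group][skill]
        people.append((group, neighborhood, skill, approved))
    return people

def train_without_group(people):
    """Learn the majority past outcome per (skill, neighborhood).
    The group column is deliberately never shown to the model."""
    outcomes = defaultdict(Counter)
    for _group, neighborhood, skill, approved in people:
        outcomes[(skill, neighborhood)][approved] += 1
    return {key: c.most_common(1)[0][0] for key, c in outcomes.items()}

def approval_rate_by_group(model, people):
    """Audit step: the share of each group that the model would approve."""
    approved, total = Counter(), Counter()
    for group, neighborhood, skill, _past in people:
        total[group] += 1
        approved[group] += model[(skill, neighborhood)]
    return {g: approved[g] / total[g] for g in sorted(total)}

model = train_without_group(make_people(10_000, seed=1))
rates = approval_rate_by_group(model, make_people(10_000, seed=2))
print(rates)  # group A is still approved far more often than group B
```

With these invented rates, group A should come out at roughly 0.56 and group B at roughly 0.24, even though the model never sees the group column. Removing a protected attribute does not remove the pattern when another variable carries the same information.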

This structure can be leveraged to critically discuss topics in the classroom setting, and then to highlight potential pitfalls and dangers in the lab periods using heavily guided exercises. My proposed goal is not to teach students how to write AI code, but to use prewritten code as a means of demonstration and exploration, and to build pathways for communicating about AI, working with AI, and recognizing potential sources of systemic imbalance.

Provided as part of the portfolio are a proposed outline for how this type of course could be run, an example paired lecture and lab for the Facial Recognition portion (including a worksheet and code), and a rough primer lecture on AI and ML, which has been adapted from my prior work and is not a finished product.

Course Outline

Bodzay outline 420-BWC-AI-Draft

Facial Recognition Series

Lecture:

Worksheet:

Lab:

ML Primer:

Bodzay Dawson AI – Intro to ML

References and Resources

- [1] OpenAI. Introducing ChatGPT. https://openai.com/blog/chatgpt

- [2] OpenAI. DALL·E 2. https://openai.com/dall-e-2

- [3] MLearning.ai (Medium), post on bias in DALL-E 2. https://medium.com/mlearning-ai/dall-e-2-creativity-is-still-biased-3a41b3485db9

- [4] Broussard, M. (2023). More than a Glitch: Confronting Race, Gender, and Ability Bias in Tech. The MIT Press.

- [5] Benjamin, R. (2020). Race After Technology: Abolitionist Tools for the New Jim Code. Polity.

- [6] Broussard, M. (2019). Artificial Unintelligence: How Computers Misunderstand the World. The MIT Press.

- [7] Google. Machine Learning Crash Course, intro to ML fairness exercise (Colab notebook). https://colab.research.google.com/github/google/eng-edu/blob/main/ml/cc/exercises/intro_to_ml_fairness.ipynb